Tech Talk

What is CXL? (Compute Express Link)

Last updated 12 July 2021

The challenges driving CXL

We hear more and more about the demands that artificial intelligence, machine learning and high-performance computing place on the datacentre.

That demand is driving new standards such as Compute Express Link, better known as ‘CXL’.

Datacentre hardware needs to keep up with this demand, and CXL is designed to help it do so.

CXL lets the CPU and accelerators share resources closely, so that parts of a workload can be disaggregated from the CPU and handed to the accelerators.

From a memory point of view, these workloads create a real need for more memory capacity and bandwidth.

Simply put, more data means more bandwidth is needed to move it and more capacity is needed to store it.

So how does it work?

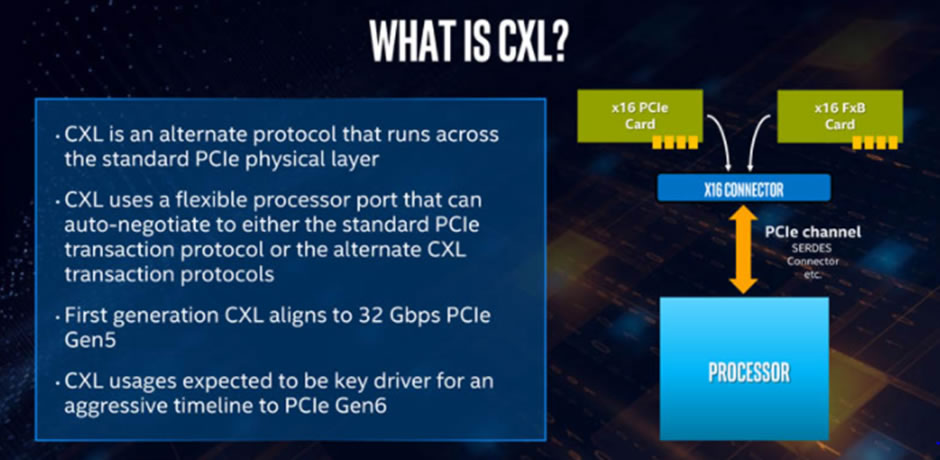

CXL is an interconnect specification that is built upon PCIe lanes.

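It provides a high-speed interconnect between the CPU and attached devices such as accelerators and memory expanders. Built on the PCIe 5.0 physical and electrical interface, CXL runs at 32 billion transfers per second (32 GT/s) per lane, or up to 128GB/s using 16 lanes when both directions are counted (roughly 64GB/s each way).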
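The 128GB/s figure follows from simple arithmetic on the per-lane transfer rate. The sketch below is a rough illustration, not a statement of delivered throughput: it assumes one bit per transfer per lane and the 128b/130b line encoding used by PCIe 5.0, and it ignores protocol overhead above the physical layer. The function name and parameters are illustrative.

```python
def link_bandwidth_gb_s(transfer_rate_gt_s=32.0, lanes=16,
                        encoding_efficiency=128 / 130):
    """Return (raw, effective) bandwidth per direction in GB/s.

    Assumes one bit per transfer per lane and 8 bits per byte.
    encoding_efficiency models 128b/130b line encoding only;
    higher-level protocol overhead is not included.
    """
    raw = transfer_rate_gt_s * lanes / 8   # Gbit/s -> GB/s
    effective = raw * encoding_efficiency
    return raw, effective


raw, effective = link_bandwidth_gb_s()
print(f"Raw, per direction:       {raw:.1f} GB/s")
print(f"After 128b/130b encoding: {effective:.1f} GB/s")
print(f"Raw, both directions:     {2 * raw:.1f} GB/s")
```

With the defaults, the raw rate is 64GB/s in each direction, so the 128GB/s headline counts traffic in both directions on a 16-lane link.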

CXL maintains memory coherency between the CPU memory space and memory on attached devices.

(Figure from Intel, 2019)

What can it bring?

In a nutshell, CXL is a way of extending current architectures beyond the traditional server box.

What do we think the benefits are going to be?

Traditionally, being locked into specific memory types and a limited number of DRAM memory channels has restricted flexibility within the datacentre. Implementing the CXL protocol should bring the following benefits as we try to keep pace with increasing data demand:

- More memory bandwidth or capacity beyond what the host processor’s native DDR memory provides, with low-latency expansion between the native memory and any memory attached to the CXL device

- Lower cost for such solutions, by right-sizing memory capacity for targeted application workloads

- The separation of components into pools, composed on demand to match the specific functions and needs of varied workloads, leading to greater performance and efficiency for each setup and its requirements
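To make the right-sizing and pooling argument concrete, here is a toy comparison. It is only a sketch under stated assumptions: the per-server demand figures are hypothetical, the shared pool is sized to cover the sum of every server's overflow, and the function names are illustrative rather than part of any CXL API.

```python
def fixed_provisioning_gb(demands_gb):
    """Total DRAM if every server is sized for the largest workload."""
    return max(demands_gb) * len(demands_gb)


def pooled_provisioning_gb(demands_gb, local_gb):
    """Total memory with a modest local DRAM size per server plus a
    shared pool sized to cover each server's overflow (assumption)."""
    overflow = sum(max(d - local_gb, 0) for d in demands_gb)
    return local_gb * len(demands_gb) + overflow


# Hypothetical peak memory demand per server, in GB.
demands = [64, 96, 128, 512]

fixed = fixed_provisioning_gb(demands)
pooled = pooled_provisioning_gb(demands, local_gb=128)
stranded = fixed - sum(demands)  # capacity bought but never used

print(f"Fixed sizing:  {fixed} GB total, {stranded} GB stranded")
print(f"Pooled sizing: {pooled} GB total")
```

In this made-up case, sizing every server for the largest workload buys 2048GB, while 128GB of local memory per server plus a 384GB shared pool covers the same demand with 896GB.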

What can we expect the future to look like?

As we move towards the end of 2021, expect the announcement of memory modules and drives based on the CXL standard, bringing together both PCIe 5.0 and DDR5 to cater for those demanding workloads and needs in the datacentre.

CXL memory will undoubtedly expand the use of memory to a whole new level, and we expect key partners Micron and Intel, both members of the CXL Consortium, to spearhead new technologies as products begin to go to market.