What is HBM (High Bandwidth Memory)?

Last updated 5 February 2024

Modern computing is demanding. Today's applications make computing tasks more complex and strenuous, requiring faster and more efficient access to data.

To enable this, new technologies and innovations come along. It's the same with memory: even with the release of DDR5, conventional memory technologies have limited bandwidth, which can bottleneck the performance of processors, graphics cards and other devices. Then along came HBM, or High Bandwidth Memory.

What is HBM?

High Bandwidth Memory (HBM) is an emerging type of computer memory designed to provide both high bandwidth and low power consumption. It is typically used in high-performance computing applications where that data speed is required.

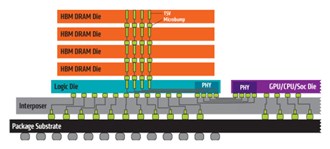

HBM uses 3D stacking technology. Multiple layers of memory chips are stacked on top of each other and connected by vertical channels called TSVs (through-silicon vias). This packs more memory chips into a smaller space and reduces the distance that data needs to travel between the memory and the processor.

HBM Specifications

| | HBM | HBM2/HBM2E (Now) | HBM3 (Next) |
| --- | --- | --- | --- |
| Max Pin Transfer Rate | 1 Gbps | 3.2 Gbps | Unknown |
| Max Capacity | 4 GB | 24 GB | 64 GB |
| Max Bandwidth | 128 GB/s | 410 GB/s | 512 GB/s |
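The pin transfer rate and the bandwidth figures in the table are linked by simple arithmetic: peak bandwidth is roughly the per-pin rate multiplied by the number of data pins, divided by 8 to convert bits to bytes. The sketch below shows that calculation. It assumes a 1024-bit data interface per HBM stack, a figure that comes from the JEDEC HBM standards rather than from this article, and the function name `peak_bandwidth_gbytes` is purely illustrative.

```python
def peak_bandwidth_gbytes(pin_rate_gbps: float, bus_width_bits: int = 1024) -> float:
    """Estimate the peak bandwidth of one memory stack in GB/s.

    pin_rate_gbps:  max transfer rate per data pin, in gigabits per second.
    bus_width_bits: number of data pins per stack. The default of 1024 is an
                    assumption (the interface width commonly quoted for HBM),
                    not a value stated in the table above.
    """
    # bits per second across all pins, divided by 8 to get bytes per second
    return pin_rate_gbps * bus_width_bits / 8


# Pin rates taken from the specifications table; HBM3 is listed as
# "Unknown" there, so it is left out rather than guessed.
for name, rate in [("HBM", 1.0), ("HBM2/HBM2E", 3.2)]:
    print(f"{name}: {peak_bandwidth_gbytes(rate):.1f} GB/s")
```

Under that assumption, 1 Gbps per pin gives 128 GB/s and 3.2 Gbps per pin gives 409.6 GB/s, which lines up with the 128 GB/s and (rounded) 410 GB/s entries in the table.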

What are its key advantages?

Here are the many advantages HBM holds over traditional memory technologies:

- More bandwidth: The main one, as per the name! HBM provides much higher bandwidth, allowing faster data transfer between the memory and the processor. The result? Improved performance and faster processing times for those complex computing tasks we mentioned in our opening.

- Lower power consumption: It's simply more power efficient. By reducing the amount of power needed to transfer data between memory and processor, HBM can help extend battery life and reduce energy consumption, which brings clear sustainability benefits.

- Improved thermal management: Leading on from the above, HBM can help reduce the amount of heat generated by a memory system, which improves overall system performance and reliability. Why? HBM is designed to operate at lower voltages, resulting in less heat being generated.

- Higher capacity: Store more data and process it at once with HBM. For server-based workloads like machine learning and AI, which require large amounts of data processing, this will be the future. The same goes for more personal tasks like video rendering and editing.

- Smaller form factor: As everything grows, we are looking for ways to reduce footprint. By using 3D stacking technology, HBM stacks multiple layers of memory chips on top of each other, reducing footprint. This is important for the likes of smartphones and notebooks.

High Bandwidth Memory right now

A variety of semiconductor companies are using HBM in their products, none more so than Micron, a key vendor partner of Simms.

Powering AI and HPC: read this excellent technical brief from Micron about how they're integrating and operating HBM2E.